Post by csnavywx on Jan 31, 2014 17:17:40 GMT -6

As an example of why percentages do not work with temperature comparisons:

Pluto receives approximately 1/1000th the amount of energy per unit of surface area that the Earth does. Yet, its temperature averages 44K. The Earth only manages 288K with 1000 times the energy per unit area AND an atmosphere.
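A quick check of the fourth-root scaling behind the Pluto comparison. This is a minimal sketch that assumes both bodies share the same albedo and emissivity and ignores any greenhouse effect. The 288 K and 1/1000 inputs come from the post, and the helper name `scaled_temperature` is purely illustrative.

```python
# Equilibrium temperature scales with the fourth root of absorbed flux:
# Stefan-Boltzmann gives F = sigma * T**4, so T is proportional to F**0.25.


def scaled_temperature(t_ref_k: float, flux_ratio: float) -> float:
    """Temperature of a body absorbing `flux_ratio` times the flux of a
    reference body at `t_ref_k` kelvin, assuming identical albedo and
    emissivity and no greenhouse effect."""
    return t_ref_k * flux_ratio ** 0.25


earth_k = 288.0     # Earth's mean temperature, from the post
ratio = 1 / 1000    # "approximately 1/1000th" the flux, from the post

pluto_estimate = scaled_temperature(earth_k, ratio)
linear_estimate = earth_k * ratio  # what a straight percentage would imply

print(f"Fourth-root estimate: {pluto_estimate:.0f} K")
print(f"Linear estimate:      {linear_estimate:.3f} K")
```

A thousandfold drop in flux only divides the temperature by about 5.6 (roughly 51 K here), while a straight percentage scaling would predict an absurd 0.288 K.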


Post by guyfromhecker on Jan 31, 2014 19:55:25 GMT -6

>> Oh crap, bad math again. OK, the lowest is -204C; add that to 20C. OK, that kicks the 0.7C+ to a whopping 0.31%. DONE. Anyway, that is slight in my book.
>
> Using a percentage for temperature change isn't really a valid way to do comparisons. The reason is the Stefan-Boltzmann law, which states that the amount of energy emitted by a blackbody (of which the Earth is a reasonably close approximation) is proportional to the fourth power of its temperature. In other words, it doesn't take much energy to get from microwave background temperatures (~3K) to, say, 50K. It takes a heck of a lot more to get from 200K to 250K (Kelvin = Celsius + 273). Using percentages to do comparisons in this case simply does not work (at all).
>
> Using the Stefan-Boltzmann law (the Wikipedia link there actually lays out the entire calculation from start to finish) and an albedo adjustment, because the Earth isn't a perfect blackbody (again the 0.7, but this time to the fourth root), gets you an effective temperature of 255K for Earth (or -18C). This would be the equilibrium temperature of Earth if it had no atmosphere. Tyndall gases (such as water vapor, carbon dioxide and methane) partially re-radiate outgoing longwave radiation, which keeps the Earth warmer than it otherwise would be (at around 15C or 288K).

Aye, but as you said, once you get an atmosphere... things get to the point that they magnify the effect of the sun. In other words, it does not take as much energy as it would have without it.

BTW, my using the whole scale was about as silly as pointing out that solar output varies by 0.25 W/m2 and calling it slight. That is using 0-240 W/m2 to get that figure. I can tell you those first 238-239 or so wouldn't do well for man. At least not comfy.

Now a question. Can you get me graphs of the 0.25 W/m2 swings captured since the satellites went up?
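A short sketch of the two Stefan-Boltzmann points above: how much extra emission each temperature step costs, and the effective-temperature calculation. It assumes a total solar irradiance of 1366 W/m2 and an albedo of 0.3 (the 0.7 factor is 1 minus the albedo); the function names are hypothetical.

```python
SIGMA = 5.670374e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def emitted_flux(t_k: float) -> float:
    """Blackbody emission in W/m^2 at temperature t_k (kelvin)."""
    return SIGMA * t_k ** 4


def effective_temperature(tsi: float, albedo: float) -> float:
    """Equilibrium temperature of a sphere with no atmosphere.

    Absorbed sunlight, tsi * (1 - albedo), is spread over the whole sphere
    (4 times its cross-section), then balanced against sigma * T^4.
    """
    absorbed = tsi * (1 - albedo) / 4
    return (absorbed / SIGMA) ** 0.25


# Extra emission needed for a 47 K rise near the microwave background...
cold_step = emitted_flux(50) - emitted_flux(3)
# ...versus a 50 K rise at more Earth-like temperatures.
warm_step = emitted_flux(250) - emitted_flux(200)
print(f"3 K -> 50 K:    {cold_step:.2f} W/m^2")
print(f"200 K -> 250 K: {warm_step:.1f} W/m^2")

# Assumed inputs: TSI of 1366 W/m^2 and an albedo of 0.3.
print(f"Effective temperature: {effective_temperature(1366, 0.3):.0f} K")
```

With these inputs the absorbed flux is about 239 W/m2 and the effective temperature comes out near 255 K, while the 200 K to 250 K step costs a few hundred times more emission than the 3 K to 50 K step.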


Post by csnavywx on Jan 31, 2014 21:47:31 GMT -6


Post by csnavywx on Jan 31, 2014 21:50:45 GMT -6


Post by guyfromhecker on Feb 1, 2014 11:37:07 GMT -6

Now on the serious stuff. Would you agree that, on average, solar output was around 1366/239 for the period 1975-2005?


Post by csnavywx on Feb 1, 2014 12:23:04 GMT -6

> Now on the serious stuff. Would you agree that, on average, solar output was around 1366/239 for the period 1975-2005?

For solar irradiance, as an average for that 30-year period, yes. (Careful with that Socratic method!)


Post by guyfromhecker on Feb 1, 2014 13:23:25 GMT -6

>> Now on the serious stuff. Would you agree that, on average, solar output was around 1366/239 for the period 1975-2005?
>
> For solar irradiance, as an average for that 30-year period, yes. (Careful with that Socratic method!)

OK, then with the best collection of data to date, we would go with a range from maybe 1363.4, back in the Maunder Minimum (when it was easy to average since there were no peaks to speak of), to the 30-year average of 1366. This was probably also pretty much the average from about 1940-2000 or so. I got my figures from here.

BTW, don't worry. The Socratic method won't hurt if you are sound in your science. Don't be afraid. Real logic is seldom fatal, LOL. Right now your only escape is to disagree with the data I show you. That is all I am doing here. He he he


Post by guyfromhecker on Feb 1, 2014 15:41:53 GMT -6

OK, I'll cut to the chase and spare you some time. This is all about how I read the solar data. Like I said, I am using the data from here. If the above did not work: citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.393.1008&rep=rep1&type=pdf

Check it out and follow the flow. From the mid 1600s to the early 1700s, solar forcing was as weak as we have seen it. It was cold. The values were in the sub-1364 range. They rebounded to above 1365 and all was getting better, for a bit. They dipped in the early 1800s and the Little Ice Age continued. Finally the sun woke up and jumped up to about a 1365 average again during the last half of the 19th century into the 20th, and we escaped the Little Ice Age.

It seems all would have been well then; I mean, this 1365 was adequate to bring us out of the freezer and make things nice. One problem: the sun decides to crank up to 1366 for a century. Oh boy, look what that did. All this climate change from about a 0.2% total variation.

This is really why we need a steady sun, relatively speaking. If it dipped below 1363 for any period of time, we would be screwed with a capital S. If it stayed below 1364 for more than two cycles, it would hurt a lot. If it jumped up to 1367, God knows what would happen. We live in a place that is incredibly unique. We are just the right distance from just the right power source. Of course it isn't always pretty, but the last 10,000 years or so have been livable. There are no guarantees that comfort will always be there, since the optimum is nearly impossible to hold with the little variation that is natural. My best guess, given where we are in the Milankovitch stuff, is that an optimum now would be somewhere around 1365. I would imagine that optimum varies at different times.


Post by csnavywx on Feb 1, 2014 18:06:53 GMT -6

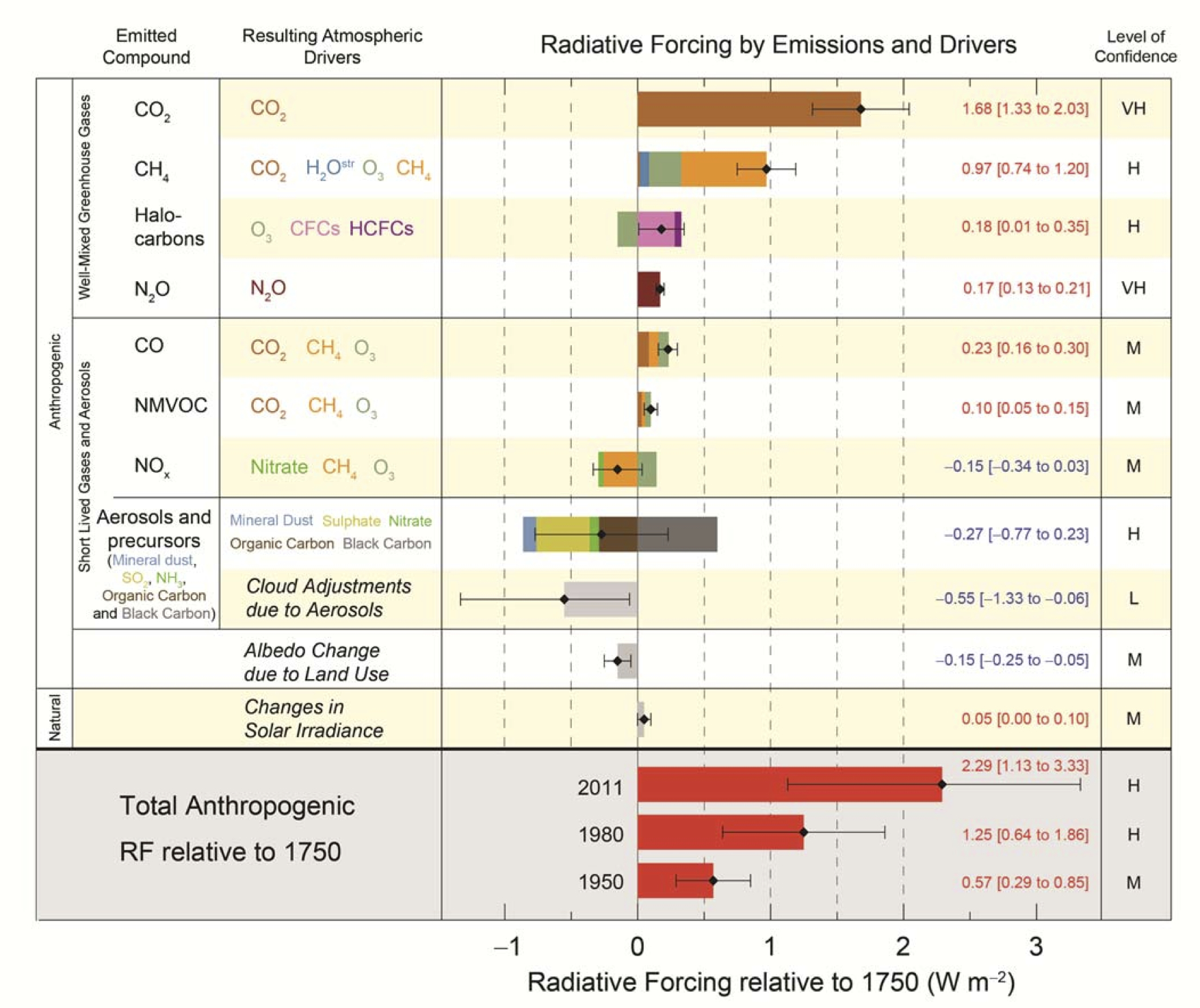

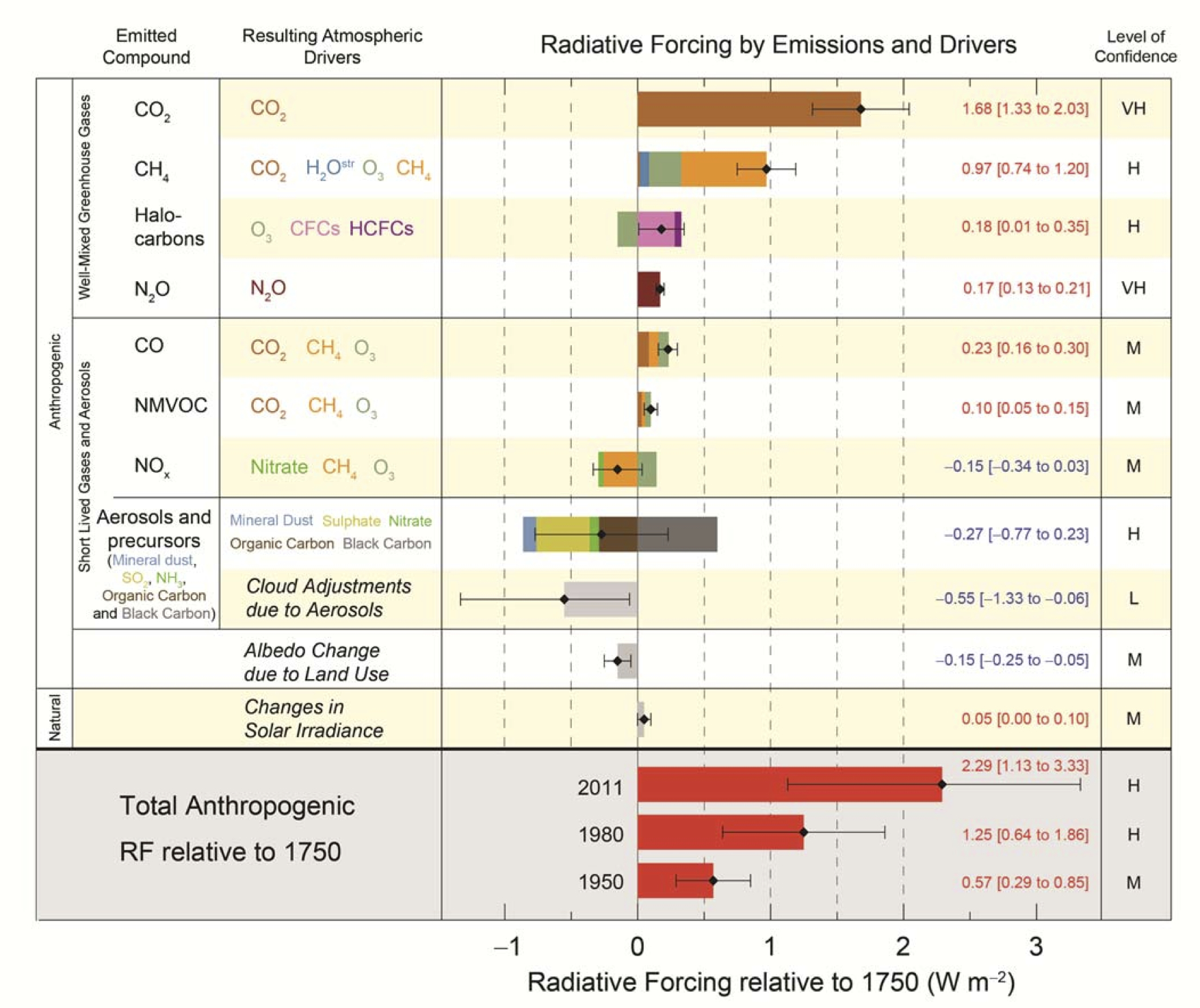

Using our earlier equation to solve for the radiative forcing gives us an almost even 0.5 W/m2. Clearly significant, and as you've pointed out, it does make a difference in temperature. How does this 0.5 compare to other forcings?

(Note that the timeframe on this chart is 1750 to 2010, and 2010 was when the sun was just coming off a deep minimum, hence the low value of solar forcing over the period.)

Wait, what? A 2.29 W/m2 increase from CO2 forcing and other gases alone? Yeowch. Well, let's convert that back into an equivalent of solar irradiance, then compare. ((2.29)*4)/0.7 (to get rid of spherical geometry and take the albedo adjustment back out of the equation) = 13.08 W/m2. That's over 5 times the change from the bottom of the Maunder Minimum to today. Even if you're an extreme optimist and take the lower 5% bound, we're still talking over twice the effect. If you're more of a pessimist and take the upper 5% confidence bound, it's 9 times as much. This is why scientists who work on the subject are worried.

The reason I was saying to "be careful" is that there's an inevitable conclusion if you argue for very high sensitivity to solar irradiance changes (solar radiative forcing): the climate must then also be very sensitive to other sources of radiative forcing. The earth "doesn't care" where the forcing is coming from, relatively speaking. It may provoke a different regional pattern, but the globe as a whole will heat up regardless of the source.
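The two conversions used in this post can be sketched as a pair of small functions. This assumes the same 0.7 factor (albedo 0.3) and the divide-by-4 for spherical geometry that the post uses; the TSI values (about 1363.4 at the Maunder Minimum and 1366 for 1975-2005) are the ones quoted earlier in the thread, and the function names are hypothetical.

```python
ALBEDO = 0.3  # the post's "0.7" factor is 1 - albedo


def tsi_change_to_forcing(delta_tsi: float, albedo: float = ALBEDO) -> float:
    """Global-mean radiative forcing (W/m^2) from a change in total solar
    irradiance: divide by 4 for spherical geometry, multiply by (1 - albedo)."""
    return delta_tsi * (1 - albedo) / 4


def forcing_to_tsi_equivalent(forcing: float, albedo: float = ALBEDO) -> float:
    """Inverse of the above: the TSI change that would give the same forcing."""
    return forcing * 4 / (1 - albedo)


# Maunder Minimum (~1363.4) to the 1975-2005 average (~1366), per the thread.
solar_delta = 1366.0 - 1363.4
solar_forcing = tsi_change_to_forcing(solar_delta)

# 2.29 W/m^2 from the radiative forcing chart, as an equivalent TSI change.
ghg_equivalent = forcing_to_tsi_equivalent(2.29)

print(f"Solar forcing since the Maunder Minimum: {solar_forcing:.2f} W/m^2")
print(f"TSI equivalent of 2.29 W/m^2: {ghg_equivalent:.2f} W/m^2")
print(f"Ratio to the solar TSI change: {ghg_equivalent / solar_delta:.1f}x")
```

This gives about 0.46 W/m2 for the solar change (the "almost even 0.5") and about 13.09 W/m2 as the TSI equivalent of 2.29 W/m2, roughly 5 times the 2.6 W/m2 solar change.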


blizzard123

Wishcaster

South County home; Columbia, MO college


Posts: 117  Snowfall Events: 27.0"--2013/2014


<2"--2016/2017

-------------------


Post by blizzard123 on Feb 1, 2014 18:14:28 GMT -6

Csnavy, how much of the CO2 increase is actually anthropogenic (like directly from burning coal, natural gas, etc) versus partially from outgassing from the oceans?


Post by csnavywx on Feb 1, 2014 18:21:20 GMT -6

So why aren't we already baking off if those changes are so huge?

1) Ice sheets and the oceans act as a giant flywheel and slow down surface temperature changes.

2) Stefan-Boltzmann law (it takes more and more energy to increase the temperature one unit as it gets hotter)

3) We are very likely partially screening ourselves with aerosols from pollution and land use (as the chart suggests above).
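Points 1 and 2 can be illustrated with a toy one-box energy-balance model, a standard textbook simplification rather than anything taken from the post. It assumes a hypothetical 100 m ocean mixed layer and keeps only the Planck (blackbody) response, with no water-vapor, cloud or ice feedbacks, so the numbers are illustrative only; all names are hypothetical.

```python
SIGMA = 5.670374e-8                    # Stefan-Boltzmann constant, W m^-2 K^-4
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def planck_response(t_k: float) -> float:
    """Extra emission (W/m^2) per extra kelvin at t_k: d(sigma*T^4)/dT."""
    return 4 * SIGMA * t_k ** 3


def one_box_warming(forcing: float, heat_capacity: float, feedback: float,
                    years: float, dt_years: float = 0.01) -> float:
    """Temperature anomaly (K) after `years` of a constant step forcing.

    Integrates C dT/dt = F - feedback * T with forward Euler, where C is a
    heat capacity in J m^-2 K^-1 and feedback is in W m^-2 K^-1.
    """
    dt = dt_years * SECONDS_PER_YEAR
    temp = 0.0
    for _ in range(round(years / dt_years)):
        temp += dt * (forcing - feedback * temp) / heat_capacity
    return temp


# Point 2: the restoring response grows with temperature (as T^3).
print(f"Planck response at 255 K: {planck_response(255):.2f} W/m^2 per K")
print(f"Planck response at 288 K: {planck_response(288):.2f} W/m^2 per K")

# Point 1: hypothetical 100 m mixed layer (water: 1000 kg/m^3, 4186 J/(kg K)),
# Planck-only feedback, 1 W/m^2 step forcing.
mixed_layer_c = 1000 * 4186 * 100
lam = planck_response(255)
equilibrium = 1.0 / lam                # K of warming at equilibrium
for years in (1, 5, 20):
    frac = one_box_warming(1.0, mixed_layer_c, lam, years) / equilibrium
    print(f"after {years:2d} years: {frac:.0%} of equilibrium warming")
```

Even this shallow layer takes several years to approach equilibrium; the deep ocean and ice sheets add far more heat capacity, which stretches the lag much further.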

Why hasn't the surface temperature increased since xxxx?

1) It actually has, but coverage bias in the satellite and thermometer records (Cowtan and Way 2013) has partially hidden the increase (in the Arctic, Antarctic and Africa).

2) Natural ocean circulation variability (like the IPO/PDO, ENSO, Southern Ocean variability) can effectively store some of that heat (we see this happening in real time now), AND the rate at which it is stored varies over time.
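The coverage-bias idea in point 1 can be shown with a toy area-weighted average. The band anomalies below are made up for illustration (they are not real observations), and this is not a reproduction of Cowtan and Way's infilling method; it only shows why leaving out fast-warming polar regions pulls a global mean down. The names `BANDS` and `area_weighted_mean` are hypothetical.

```python
import math

# Hypothetical latitude-band temperature anomalies in K (illustrative only,
# not real data). Each entry: (band centre latitude in degrees, anomaly).
# The northern polar band is given the largest warming.
BANDS = [(-75, 0.6), (-45, 0.3), (-15, 0.4), (15, 0.4), (45, 0.5), (75, 1.5)]


def area_weighted_mean(bands, max_abs_lat=90.0):
    """Mean anomaly over bands with |lat| <= max_abs_lat, weighted by the
    cosine of each band's centre latitude (proportional to area for
    equal-width bands)."""
    used = [(lat, a) for lat, a in bands if abs(lat) <= max_abs_lat]
    weights = [math.cos(math.radians(lat)) for lat, _ in used]
    return sum(w * a for w, (_, a) in zip(weights, used)) / sum(weights)


full = area_weighted_mean(BANDS)
gappy = area_weighted_mean(BANDS, max_abs_lat=60)  # drop unsampled polar bands
print(f"full coverage: {full:.3f} K, with polar gaps: {gappy:.3f} K")
```

The cosine weighting is exact for equal-width bands, since a band's area is proportional to the difference of the sines of its edge latitudes, which equals twice the cosine of its centre times a constant.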


Post by blizzard123 on Feb 1, 2014 18:26:58 GMT -6

Friv and I were discussing in chat a couple of weeks ago why CAGW is probably unlikely as it stands now. We talked about how, when the oceans near the poles warm, the ice melts, but in winter that open ocean releases its heat more effectively than when there is ice covering it, so the rise in heat content wouldn't become enormous.


Post by csnavywx on Feb 1, 2014 18:43:21 GMT -6

> Csnavy, how much of the CO2 increase is actually anthropogenic (like directly from burning coal, natural gas, etc) versus partially from outgassing from the oceans?

At this point, virtually all of it. The oceans are acting as a huge brake on the system by absorbing fully 20-30% of what we emit. When we emit CO2, the partial pressure of CO2 in the atmosphere increases relative to the ocean. This knocks the air-sea interface out of equilibrium. If there is a higher partial pressure on either side of the interface, CO2 will flow from the side with higher partial pressure to the side with lower partial pressure. When you pop the top on your fizzy soda, the abundance (high partial pressure) of CO2 in the drink is wildly out of equilibrium with the air around it, and it comes out. In the case of the atmosphere, the situation is simply reversed. The ocean will eventually sequester most of the CO2 we emit, but this process takes a very long time (on the order of centuries to millennia).

Temperature also affects the amount of CO2 the ocean can hold, much the way it does with a fizzy soda: if you heat up your soda, the fizz leaves much faster than if you keep it cold. Warming is expected to diminish the ability of the ocean to dissolve CO2 and to increase the strength of the inversion in surface waters, making mixing with deep water slower.
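The partial-pressure and temperature points can be sketched with Henry's law for CO2. The constants are commonly cited values for fresh water (about 0.034 mol L^-1 atm^-1 at 25 C, with a van 't Hoff temperature coefficient of about 2400 K) and are assumptions here. Real seawater also stores CO2 as bicarbonate and carbonate, so this deliberately leaves out the carbonate chemistry that dominates actual ocean uptake; the function names are hypothetical.

```python
import math

# Assumed Henry's-law constant for CO2 in fresh water at 298.15 K and its
# temperature coefficient (commonly cited values, used here as assumptions).
KH_298 = 0.034      # mol L^-1 atm^-1
VANT_HOFF = 2400.0  # K, d(ln kH)/d(1/T)


def henry_constant(t_k: float) -> float:
    """CO2 solubility constant at temperature t_k (van 't Hoff form)."""
    return KH_298 * math.exp(VANT_HOFF * (1 / t_k - 1 / 298.15))


def dissolved_co2(p_co2_atm: float, t_k: float) -> float:
    """Equilibrium dissolved CO2 (mol/L) for a given partial pressure."""
    return henry_constant(t_k) * p_co2_atm


# Raising atmospheric pCO2 raises the equilibrium dissolved amount in
# proportion, which is what drives net uptake by the water.
for ppm in (280, 400):
    print(f"{ppm} ppm at 15 C: {dissolved_co2(ppm * 1e-6, 288.15):.2e} mol/L")

# Warming lowers solubility: the "warm soda" effect.
ratio = henry_constant(288.15) / henry_constant(298.15)
print(f"15 C water holds {ratio:.2f}x as much CO2 as 25 C water")
```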


Post by csnavywx on Feb 1, 2014 18:46:48 GMT -6

> Friv and I were discussing in chat a couple of weeks ago why CAGW is probably unlikely as it stands now. We talked about how, when the oceans near the poles warm, the ice melts, but in winter that open ocean releases its heat more effectively than when there is ice covering it, so the rise in heat content wouldn't become enormous.

Correct. This strong negative feedback will help slow down the rapid ice loss we've seen over the past 15 years. Of course, the release of extra heat in the autumn and winter does affect the atmosphere above it (by thickening it and raising heights), and this is the subject of much study in climate science right now, since what happens in the Arctic definitely doesn't stay there when it comes to weather.


Post by csnavywx on Feb 1, 2014 19:21:05 GMT -6

I realize I didn't source the radiative forcing graph. It's from the IPCC's AR5 report.


Post by guyfromhecker on Feb 1, 2014 22:25:46 GMT -6

Let me pull out my razor. Thank you for all the theoretical stuff. Thank you, Mr. Occam, for letting me borrow your razor. OK, here we go.

Solar output was below 1364 from 1620 till about 1720. It was dreadfully cold. Things got a little better, then it dipped back into that sub-1364 range again early in the 19th century. Bad luck, then a stupid volcano blew up. Ouch! Still cold. Solar forcing recovers and stays comfortably, on average, above that nasty 1364 line, and that little jump was enough to propel us out of the Little Ice Age. WOW, without any big jump in CO2? A measly 1.3-1.5 W/m2 jump did all that! Crazy. Lo and behold, the solar forcing goes up another 1.3-1.5 over the 20th century. Oh, that's not supposed to do much. Really?

The only way to break the fallacy of many bogus theories is time. We know so little about the whole process that sometimes only time really tells. We still do not fully understand what a one-watt change from the sun does. We have been too busy trying to figure everything else out first. Ya gotta know #1 before you figure out #2.


Post by guyfromhecker on Feb 2, 2014 8:30:01 GMT -6

Let's go back to those sunspots and other proxies, which do correlate decently with solar output. According to the data, during the 20th century we were just in the strongest solar maximum of the last 10,000 years. In fact, it was so far above the most recent ones, those over the last 8,500 years, that you could hardly put any of them in the same class.

What was going on the last time the sun was this active? We were busting out of an ice age. Of course this kind of solar forcing couldn't do that, could it?

Fact is, there were several maxima back then that finally pulled us out. Only one of them appeared to be equal to or greater than the one we just experienced.


Post by csnavywx on Feb 2, 2014 8:35:44 GMT -6

> Let me pull out my razor. Thank you for all the theoretical stuff. Thank you, Mr. Occam, for letting me borrow your razor. OK, here we go.
>
> Solar output was below 1364 from 1620 till about 1720. It was dreadfully cold. Things got a little better, then it dipped back into that sub-1364 range again early in the 19th century. Bad luck, then a stupid volcano blew up. Ouch! Still cold. Solar forcing recovers and stays comfortably, on average, above that nasty 1364 line, and that little jump was enough to propel us out of the Little Ice Age. WOW, without any big jump in CO2? A measly 1.3-1.5 W/m2 jump did all that! Crazy. Lo and behold, the solar forcing goes up another 1.3-1.5 over the 20th century. Oh, that's not supposed to do much. Really?
>
> The only way to break the fallacy of many bogus theories is time. We know so little about the whole process that sometimes only time really tells. We still do not fully understand what a one-watt change from the sun does. We have been too busy trying to figure everything else out first. Ya gotta know #1 before you figure out #2.

Errr, we have a pretty darn good idea what that does. Again, radiative forcing calculations are pretty easy. It's the response time and climate sensitivity that we still have some questions on. But please understand: if you're arguing for high temperature sensitivity to the sun's radiative forcing, this means climate sensitivity is on the high end (>1 K per W/m2). Again, it doesn't matter where the extra heat comes from; globally the climate will react the same way whether you turn up the burner or trap more heat by throwing a blanket over it. We know CO2's contribution to radiative forcing from a relatively simple calculation of the Planck temperature equation. The question has always been the strength of the associated feedbacks. Occam's Razor isn't going to solve that question. The Razor can be a useful heuristic tool, but it is not an irrefutable piece of logic or a scientific result.
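The point that the source of the forcing does not matter can be written as a one-line linear response: warming equals sensitivity times forcing. For the CO2 side, this sketch swaps in the widely used simplified fit 5.35 ln(C/C0) W/m2 (Myhre et al. 1998) rather than the calculation mentioned in the post. The sensitivity values looped over are hypothetical, and the 0.46 W/m2 solar figure is the Maunder Minimum to 1975-2005 change worked out from the thread's TSI numbers.

```python
import math


def co2_forcing(c_ppm: float, c0_ppm: float = 280.0) -> float:
    """Simplified CO2 radiative forcing in W/m^2: 5.35 * ln(C / C0).
    (Myhre et al. 1998 fit, standing in for a full radiative-transfer run.)"""
    return 5.35 * math.log(c_ppm / c0_ppm)


def equilibrium_warming(forcing: float, sensitivity: float) -> float:
    """Equilibrium warming (K) = sensitivity (K per W/m^2) * forcing (W/m^2).

    The source of the forcing appears nowhere: a W/m^2 from the sun and a
    W/m^2 from CO2 get the same global-mean response.
    """
    return sensitivity * forcing


solar = 0.46                      # W/m^2, Maunder Minimum to 1975-2005
doubling = co2_forcing(560.0)     # about 3.7 W/m^2 for doubled CO2

for lam in (0.5, 0.8, 1.2):       # hypothetical sensitivities, K per W/m^2
    print(f"lambda={lam}: solar {equilibrium_warming(solar, lam):.2f} K, "
          f"2xCO2 {equilibrium_warming(doubling, lam):.1f} K")
```

Whatever sensitivity you pick multiplies both forcings equally, so a sensitivity high enough to make the solar change matter also makes the CO2 change matter proportionally more.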


Post by guyfromhecker on Feb 2, 2014 13:33:47 GMT -6


> Wait, what? A 2.29 W/m2 increase from CO2 forcing and other gases alone? Yeowch. Well, let's convert that back into an equivalent of solar irradiance, then compare. ((2.29)*4)/0.7 (to get rid of spherical geometry and take the albedo adjustment back out of the equation) = 13.08 W/m2. That's over 5 times the change from the bottom of the Maunder Minimum to today.

Please, do you really think that has the same effect as the sun shooting up to almost 1380? I mean, come on, we would be boiling by now. Isn't that enough to make you scratch your head? God, I would be worried too, but these guys don't have a clue.


Post by csnavywx on Feb 2, 2014 23:38:16 GMT -6

Just out of curiosity, what temperature reconstructions do you refer to when you say "very cold" in the Little Ice Age? Most of the reconstructions I see average a 0.2 to 0.3C difference globally on either side of that period. Nothing that comes close to the 0.6C increase in the last 35 years.


Post by csnavywx on Feb 2, 2014 23:45:19 GMT -6

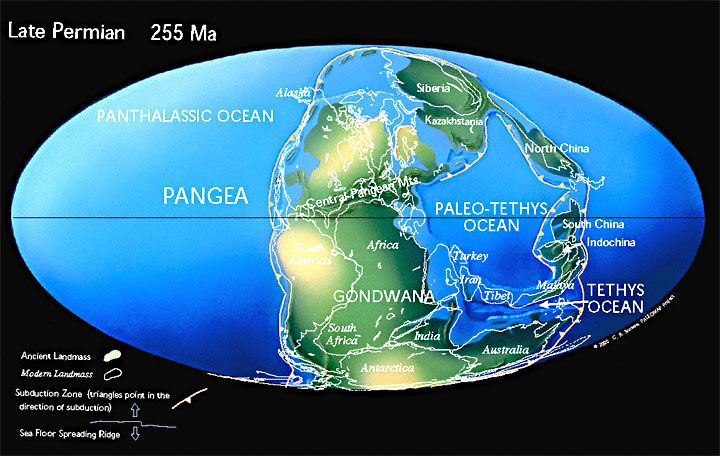

And no, 1380 isn't going to "boil us off". The sun was 3% fainter during the Permian and we didn't freeze into a snowball. In fact, we came out of a couple of "snowball" glaciations with 5-7% less solar luminosity than today.

Hell, the sun was 13-20 W/m2 weaker during the age of the dinosaurs, but we saw a world 6-8C warmer than today.


Post by guyfromhecker on Feb 3, 2014 9:00:42 GMT -6

Can you give me a reliable source for where you get the ancient solar values?


Post by guyfromhecker on Feb 3, 2014 9:42:41 GMT -6

I'm particularly interested in the Permian solar values


fareastwx21

Wishcaster

Clay County, Illinois on the banks for the Little Wabash


Posts: 189


Post by fareastwx21 on Feb 3, 2014 14:00:02 GMT -6

AR1967 has continued to grow in size and complexity. NOAA has boosted the probability of X flares to 50 percent. If the spot produces a flare, it would likely be Earth-directed in the next few days.


Post by fareastwx21 on Feb 3, 2014 14:04:05 GMT -6

> Just out of curiosity, what temperature reconstructions do you refer to when you say "very cold" in the Little Ice Age? Most of the reconstructions I see average a 0.2 to 0.3C difference globally on either side of that period. Nothing that comes close to the 0.6C increase in the last 35 years.

From my reading, I thought the accepted value was colder by 1 to 1.5 degrees C. But not all of the Little Ice Age was cold; it seemed to be a period of much more turbulent swings in temperature that was overall colder in many places, such as Europe and the eastern part of North America.


Post by csnavywx on Feb 3, 2014 18:35:05 GMT -6

>> Just out of curiosity, what temperature reconstructions do you refer to when you say "very cold" in the Little Ice Age? Most of the reconstructions I see average a 0.2 to 0.3C difference globally on either side of that period. Nothing that comes close to the 0.6C increase in the last 35 years.
>
> But not all of the Little Ice Age was cold; it seemed to be a period of much more turbulent swings in temperature that was overall colder in many places, such as Europe and the eastern part of North America.

Regionally, perhaps. Global data does not support that kind of drop at all.


Post by csnavywx on Feb 3, 2014 19:00:32 GMT -6

> Can you give me a reliable source for where you get the ancient solar values?

I think this one covers the bases pretty well. It's more of a review or meta-analysis type paper. Of particular importance to this thread is the Yonsei-Yale stellar evolution diagram. The basic idea is beautifully simple: when the Sun fuses hydrogen, it creates helium, which is denser, so it sinks and collects in the core. Over long periods of time, this density increase causes the pressure in the core to rise, which also causes the temperature to rise, thus increasing the rate of fusion. This results in a slow increase in luminosity. At this point in time, the rate is approximately a 1% increase every ~110 million years (so we're talking ~2.5% for the Permian; I was slightly off there).
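The rule of thumb in this post (about 1% brightening per ~110 million years) is easy to turn into numbers. This sketch applies it linearly, which is an assumption that only holds roughly over the last few hundred million years; the ages are approximate sample values, the 1366 W/m2 baseline is the thread's modern average, and the function names are hypothetical.

```python
TSI_TODAY = 1366.0        # W/m^2, the thread's 1975-2005 average
MYR_PER_PERCENT = 110.0   # ~1% brightening per ~110 million years (post)


def percent_fainter(myr_ago: float) -> float:
    """Rough % by which the Sun was fainter `myr_ago` million years ago,
    using a linear version of the post's rule of thumb."""
    return myr_ago / MYR_PER_PERCENT


def tsi_deficit(myr_ago: float) -> float:
    """Corresponding shortfall in TSI at Earth's orbit, in W/m^2."""
    return TSI_TODAY * percent_fainter(myr_ago) / 100


# Approximate sample ages in millions of years.
for label, age in [("Permian (~275 Myr)", 275), ("~100 Myr ago", 100),
                   ("~160 Myr ago", 160)]:
    print(f"{label}: {percent_fainter(age):.1f}% fainter, "
          f"~{tsi_deficit(age):.0f} W/m^2 less TSI")
```

Under these assumptions the Permian comes out near 2.5% fainter, and a 13-20 W/m2 shortfall corresponds to roughly 105 to 160 million years ago.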


Post by guyfromhecker on Feb 3, 2014 19:02:50 GMT -6

Navy, could you tell me where you got the solar data for the long-lost times?


Post by guyfromhecker on Feb 3, 2014 22:22:31 GMT -6

Kinda hard to really compare Permian times to now. I mean, it wasn't even close to the same world, so how can you compare it climatically? Who knows how solar forcing can even be compared between then and now.


Post by fareastwx21 on Feb 5, 2014 8:49:54 GMT -6

>> From my reading, I thought the accepted value was colder by 1 to 1.5 degrees C. But not all of the Little Ice Age was cold; it seemed to be a period of much more turbulent swings in temperature that was overall colder in many places, such as Europe and the eastern part of North America.
>
> Regionally, perhaps. Global data does not support that kind of drop at all.

It is my understanding that the new data does support that kind of drop: journals.ametsoc.org/doi/abs/10.1175/2011JCLI4145.1

With a new proxy reconstruction, I believe they came up with a drop of close to 1.1 C during the "Little Ice Age" period.
